About Me

I am a fourth-year Ph.D. candidate at SCUT, supervised by Prof. Lei Zhang. My research focuses on Object Perception and Understanding.

In research, we have made significant contributions to the open-set object detection field. We developed text-prompted models including Grounding DINO 1.5, visual-prompted models including T-Rex and T-Rex2, and the unified vision model DINO-X. We are also exploring the next generation of detection models, proposing MLLM-based approaches such as ChatRex, RexSeek, Rex-Thinker, and Rex-Omni.

In open source, I maintain and contribute to several impactful projects. I developed Resophy, an agentic paper reading tool that helps researchers read papers faster with AI. I created the CodeCookbook to share best practices for crafting good code. I was a core contributor to MMOCR, OpenMMLab's OCR toolbox, and maintain the Scene Text Recognition Recommendations repository, which tracks the latest papers, datasets, and SOTA methods.

In products, we have developed practical applications that bridge research and real-world impact. CountAnything is an iOS app that uses computer vision for automatic counting in the industrial, agricultural, and aquaculture sectors. T-Rex Label is an online annotation tool designed for complex scenarios, helping users create high-quality datasets efficiently. I believe open source is fundamental to the sustainable development of the AI community.

Preprint

Rex-Thinker: Grounded Object Referring via Chain-of-Thought Reasoning

Qing Jiang*, Xingyu Chen*, Zhaoyang Zeng, Junzhi Yu, Lei Zhang

[arXiv 2025] | [Github]

ChatRex: Taming Multimodal LLM for Joint Perception and Understanding

Qing Jiang, Gen Luo, Yuqin Yang, Yihao Chen, Yuda Xiong, Zhaoyang Zeng*, Tianhe Ren*, Lei Zhang

[arXiv 2024] | [Github]

DINO-X: A Unified Vision Model for Open-World Object Detection and Understanding

IDEA-CVR Team

[arXiv 2024] | [Homepage] | [Github] | [Demo]

Grounding DINO 1.5: Advance the "Edge" of Open-Set Object Detection

Tianhe Ren*, Qing Jiang*, Shilong Liu*, Zhaoyang Zeng*, Wenlong Liu, Han Gao, Hongjie Huang, Zhengyu Ma, Xiaoke Jiang, Yihao Chen, Yuda Xiong, Hao Zhang, Feng Li, Peijun Tang, Kent Yu, Lei Zhang

[arXiv Preprint 2024] | [Homepage] | [Github] | [Demo]

T-Rex: Counting by Visual Prompting

Qing Jiang, Feng Li, Tianhe Ren, Shilong Liu, Zhaoyang Zeng, Kent Yu, Lei Zhang

[arXiv Preprint 2024] | [Homepage] | [Github] | [Demo]

Publications

Referring to Any Person

Qing Jiang, Lin Wu, Zhaoyang Zeng, Tianhe Ren, Yuda Xiong, Yihao Chen, Lei Zhang

[ICCV 2025] | [Github]

T-Rex2: Towards Generic Object Detection via Text-Visual Prompt Synergy

Qing Jiang, Feng Li, Zhaoyang Zeng, Tianhe Ren, Shilong Liu, Lei Zhang

[ECCV 2024] | [Homepage] | [Github] | [Demo]

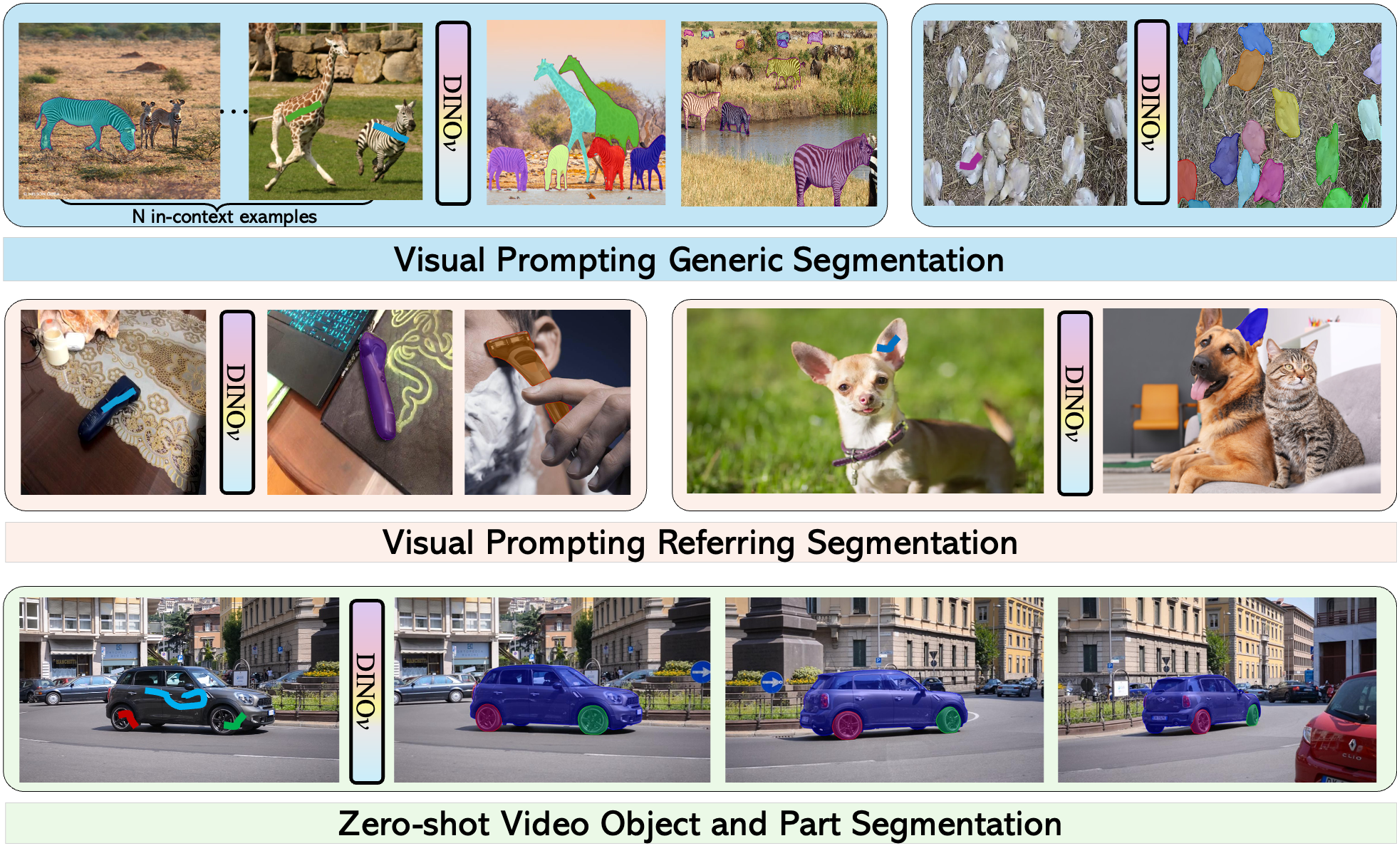

Visual In-Context Prompting

Feng Li, Qing Jiang, Hao Zhang, Tianhe Ren, Shilong Liu, Xueyan Zou, Huaizhe Xu, Hongyang Li, Chunyuan Li, Jianwei Yang, Lei Zhang, Jianfeng Gao

[CVPR 2024] | [Code]

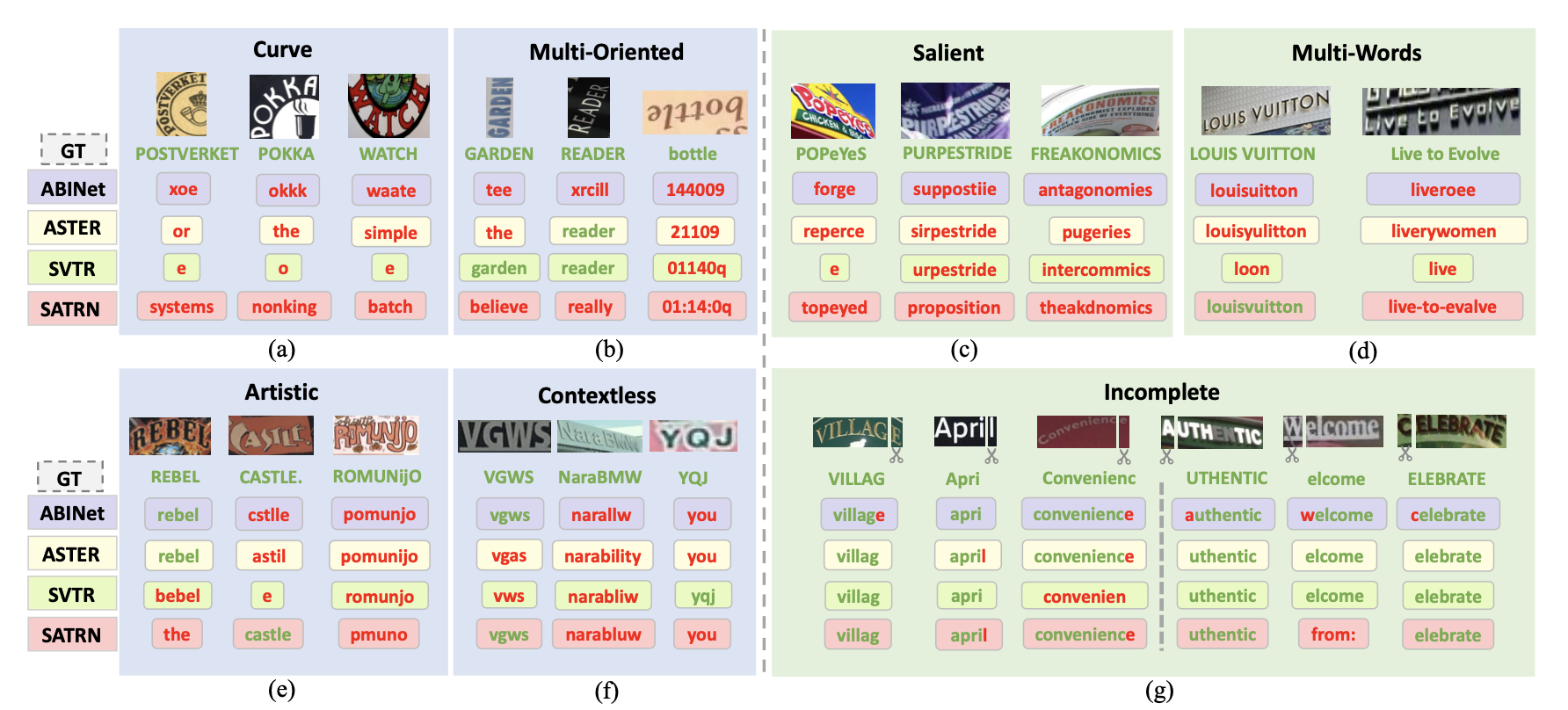

Revisiting Scene Text Recognition: A Data Perspective

Qing Jiang, Jiapeng Wang, Dezhi Peng, Chongyu Liu, Lianwen Jin

[ICCV 2023] | [Homepage] | [Code] | [Demo]

Experience

| International Digital Economy Academy (IDEA) | Research intern | 2023.06 – now |

| Shanghai AI Lab (OpenMMLab) | Intern | 2022.02 – 2022.08 |